Data Center Cooling Best Practices

As life continues to shift towards online resources, it’s easy to imagine the world being freed of physical objects. After all, the information available in the books and DVDs on your shelf, the documents in your filing cabinet and the pages of your calendar are all available through a device that fits in your pocket. Even more amazing, most of this information doesn’t even exist on that phone, relying only on a connection to cloud-based network services.

However, even though we no longer see the objects, they still take up physical space, albeit in a different form: racks of servers continuously transmitting and storing information. At first glance, organizing one of these data centers seems simple. All you need to do is line up the server racks, install some cooling and connect your company to the new network.

It’s only once you start planning a data center that you realize what a complex endeavor it truly is. As with any electronic device, these servers and network devices generate heat. But unlike smaller electronic devices, the heat generated by these data centers cannot be managed by a simple fan. Instead, data centers require careful planning that applies the principles of heat transfer and airflow to cool equipment on this scale.

How Data Centers Are Cooled

One of the challenges in arranging a data center is its multi-faceted nature. Capacity, cooling, budgeting, efficiency and power are all major considerations when planning a center, and not addressing these issues leaves your servers at risk of failure.

When designing a data center, your major goals should be to:

- Create technical capacity: Any plan for a data center must account for how much data can be stored and processed. Be sure to account for possible future growth as well. Even if you don’t initially invest in all of the hardware you may eventually want, planning for it now lets you expand later without making the layout awkward, cramped or messy.

- Account for thermal needs: These facilities generate a tremendous amount of heat, which takes careful planning and resources to dissipate. Cooling failures caused by miscalculated cooling requirements can let components overheat quickly and destroy sensitive electronics. Popular solutions include hot-and-cold-aisle layouts, which manage airflow to pull heat away from network equipment, and liquid cooling techniques.

- Use power efficiently: Running the machines and cooling them both require significant power; nearly 2 percent of United States energy consumption goes to data centers. A well-designed data center power and cooling plan applies cooling techniques and power distribution efficiently, using less energy and reducing the center’s carbon footprint.
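
To make the capacity and thermal goals more concrete, here is a minimal Python sketch that converts an IT load into the cooling capacity needed to remove it. It uses two standard conversions (1 W is about 3.412 BTU/hr, and 1 ton of refrigeration is 12,000 BTU/hr) and assumes, for illustration, that essentially all power drawn by IT equipment ends up as heat. The rack figures and the 30 percent growth allowance are hypothetical placeholders, not recommendations.

```python
# Minimal sketch: size cooling from IT load, with headroom for future growth.
# Assumption: nearly all electrical power consumed by IT equipment becomes heat.

WATTS_TO_BTU_PER_HR = 3.412   # 1 W is about 3.412 BTU/hr
BTU_PER_HR_PER_TON = 12_000   # 1 ton of refrigeration = 12,000 BTU/hr


def cooling_requirement(rack_loads_w: list[float], growth_factor: float = 1.3) -> dict[str, float]:
    """Return the heat load and required cooling for a set of racks.

    growth_factor is a hypothetical planning allowance (1.3 = 30% headroom)
    so the cooling plan can absorb hardware you expect to add later.
    """
    it_load_w = sum(rack_loads_w)
    planned_load_w = it_load_w * growth_factor
    btu_per_hr = planned_load_w * WATTS_TO_BTU_PER_HR
    return {
        "it_load_kw": it_load_w / 1000,
        "planned_load_kw": planned_load_w / 1000,
        "btu_per_hr": btu_per_hr,
        "cooling_tons": btu_per_hr / BTU_PER_HR_PER_TON,
    }


if __name__ == "__main__":
    # Hypothetical example: ten racks drawing 5 kW each.
    result = cooling_requirement([5_000.0] * 10)
    for key, value in result.items():
        print(f"{key}: {value:,.1f}")
```

For the ten-rack example, the 50 kW load grows to a planned 65 kW, which works out to roughly 18.5 tons of cooling.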

Data Center Cooling Methods

There are several methods you can use to cool your data center and prevent the risk of fire or equipment burnout. Data center cooling systems include:

- Computer room air conditioner: A computer room air conditioner (CRAC) is a cooling unit designed for commercial server rooms. CRACs are relatively affordable and produce cool air using a refrigerant-based cooling cycle.

- Cold aisle and hot aisle design: This method recirculates your internal air by arranging equipment into hot and cold aisles. Cabinets and racks are lined up in rows, with alternate rows facing opposite directions. As a result, the fronts (air intakes) of two rows face each other across one aisle and the backs (exhaust vents) face each other across the next, creating alternating aisles of cold and hot air. The CRAC draws hot air from the hot aisles and supplies cool air to the cold aisles.

- Free cooling: Free cooling is a popular, cost-saving method in regions with cold climates, as it allows facilities to vent hot air and draw cool outside air into the facility.

- Direct-to-chip cooling: Direct-to-chip cooling is a liquid cooling approach. Coolant travels through a series of pipes to a cold plate mounted directly on the processor. The cold plate absorbs the chip’s heat, which the coolant carries away to a loop of chilled water.
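
Free cooling only works when the outside air is cold enough, so facilities that combine it with CRAC units need some rule for switching between them. The Python sketch below shows one simple way a controller might choose between full free cooling, partial free cooling and mechanical cooling. The setpoint, the "approach" margin and the return temperature are hypothetical parameters chosen for illustration, and real economizer controls also weigh humidity and air quality, which this sketch ignores.

```python
from enum import Enum


class CoolingMode(Enum):
    FREE = "free cooling"        # outside air alone can meet the supply setpoint
    PARTIAL = "partial free"     # outside air pre-cools; mechanical cooling handles the rest
    MECHANICAL = "mechanical"    # outside air too warm to help; rely on the CRAC units


def choose_mode(outdoor_c: float, supply_setpoint_c: float = 20.0,
                approach_c: float = 3.0, return_c: float = 32.0) -> CoolingMode:
    """Pick a cooling mode from the outdoor temperature (all values in deg C).

    approach_c is a hypothetical margin for heat-exchanger and fan losses: outside
    air must be at least this much colder than the setpoint to do the job alone.
    """
    if outdoor_c <= supply_setpoint_c - approach_c:
        return CoolingMode.FREE
    if outdoor_c < return_c:
        # Still cooler than the hot return air, so bringing it in reduces the load
        # left for mechanical cooling.
        return CoolingMode.PARTIAL
    return CoolingMode.MECHANICAL


if __name__ == "__main__":
    for outdoor in (5.0, 25.0, 35.0):
        print(f"{outdoor:.0f} C outside -> {choose_mode(outdoor).value}")
```

With the default parameters, 5 °C outside selects free cooling, 25 °C selects partial free cooling and 35 °C falls back to mechanical cooling.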

Whatever method you choose as your data center cooling solution, always remember to inspect your equipment for temperature-related wear and tear over time. Not sure where to begin? DataSpan provides turnkey data center cooling solutions from the first design layout to the final installation.

How Does Heat Affect My Data Center Cooling Design?

Controlling thermal output from servers is one of the most critical aspects of data center maintenance. Large data centers often use modern airflow techniques and evaporative cooling systems. Overheating data centers can mean higher cooling costs as well as increased risk of hardware damage, data loss or even fires. The most important factor in regulating equipment temperature is airflow.

Regulating airflow involves several concepts, including:

- Moving heat away from components: The primary function of airflow is to move generated heat away from heated hardware. Ensuring all systems have adequate airflow is essential to providing appropriate cooling.

- Using raised floor tiles: If you have put a hot and cold aisle layout in place, installing perforated raised-floor tiles in the cold aisles can increase airflow where servers draw in air, helping overall cooling. Raised flooring does not generally provide the same benefits in hot aisles.

- Implementing aisle containment: As air flows through the system, it is imperative that warm and cold air do not mix. Letting hot air heat the cold air works against the cooling properties of air-based systems. For this reason, it can be critical to build either a Hot Aisle Containment System (HACS) or a Cold Aisle Containment System (CACS). When curtains or barriers prevent intermixing, the aisles retain their temperature differences and the system runs more efficiently. Note that you should isolate either the hot aisles or the cold aisles; containing both generally offers no benefit over containing only one.

Because of the continued growth of major data centers and concerns over their power consumption, large data centers receive much of the attention. However, smaller data centers, such as a single server in an office building basement, also constitute a significant portion of the data center sector — approximately 40 percent of all server stock — and consume up to 13 billion kilowatt-hours (kWh) annually. Because smaller networks might not require the same intensity of cooling, these systems often do not employ advanced cooling methods, relying instead on standard, inefficient room-cooling techniques.

While it may appear that powering a dedicated cooling system consumes more energy, electronics often have concentrated areas of heat. Cooling the entire room to the temperature the electronics need, especially without redirecting the heat away from the air intakes, is an extremely inefficient model. Targeted cooling systems address these hot spots more effectively, focusing on precise areas of the rack and using airflow management to work more efficiently. The energy used by a targeted cooling system will very likely cost less than the power needed to cool the room itself.

Like any method, complicated cooling techniques can experience breakdowns that imperil the equipment. Blower motors and water pumps can malfunction, so the air or fluid that carries heat away from components stops moving. Improper maintenance can allow dust and dirt to block essential airflow. Sensors can register inaccurate readings. In any of these situations, temperatures can quickly reach dangerous levels and damage hardware, which is why regular monitoring and maintenance are integral to keeping a data center running.
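
Automated monitoring can catch many of these failures before they damage hardware. The minimal Python sketch below checks each new sensor reading against an acceptable range, treats physically implausible values as a likely sensor fault, and flags rapid temperature swings. The 18 to 27 °C range loosely follows the commonly cited ASHRAE recommended inlet range; all thresholds and the `Reading` type are hypothetical and should be replaced with your equipment manufacturer’s specifications.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds for illustration; replace with your equipment's specifications.
LOW_C, HIGH_C = 18.0, 27.0          # acceptable server inlet range
MAX_RATE_C_PER_MIN = 0.5            # flag temperature swings faster than this
PLAUSIBLE_RANGE_C = (0.0, 60.0)     # values outside this suggest a faulty sensor


@dataclass
class Reading:
    sensor_id: str
    minute: float       # time of the reading, in minutes
    inlet_c: float      # server inlet air temperature, deg C


def check_reading(cur: Reading, prev: Optional[Reading] = None) -> list[str]:
    """Return alert messages for a new reading; an empty list means no problems found."""
    lo, hi = PLAUSIBLE_RANGE_C
    if not lo <= cur.inlet_c <= hi:
        # An impossible value is more likely a sensor fault than a real temperature.
        return [f"{cur.sensor_id}: implausible reading {cur.inlet_c} C, check the sensor"]
    alerts: list[str] = []
    if cur.inlet_c > HIGH_C:
        alerts.append(f"{cur.sensor_id}: inlet too hot ({cur.inlet_c} C)")
    elif cur.inlet_c < LOW_C:
        alerts.append(f"{cur.sensor_id}: inlet below range ({cur.inlet_c} C), possible overcooling")
    if prev is not None and cur.minute > prev.minute:
        # Rapid swings can indicate a failing fan or pump even while still in range.
        rate = abs(cur.inlet_c - prev.inlet_c) / (cur.minute - prev.minute)
        if rate > MAX_RATE_C_PER_MIN:
            alerts.append(f"{cur.sensor_id}: temperature changing {rate:.1f} C per minute")
    return alerts
```

In practice, readings would come from your monitoring system and alerts would be routed to staff; those integrations are outside the scope of this sketch.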

What Is a Hot and Cold Aisle Layout?

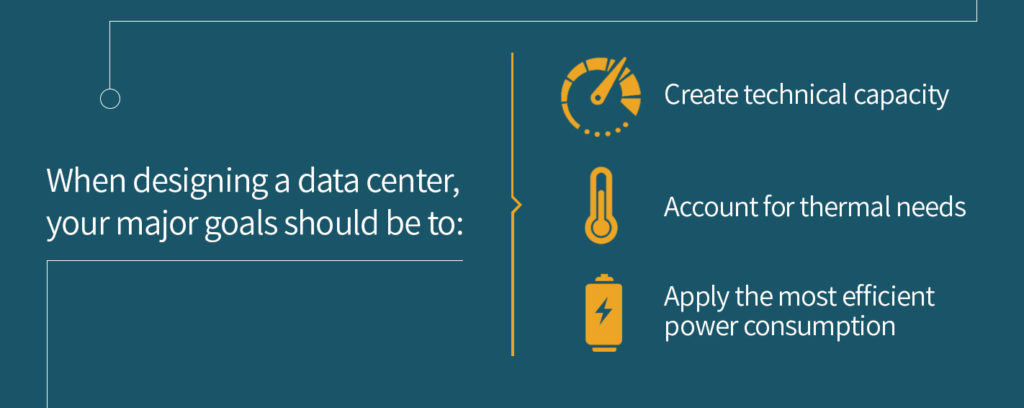

Hot and cold aisles are the most popular cooling layout for data centers. A server is designed to draw in room air through the front, cool necessary components, and then expel the hot air out the back. Understanding this, you can quickly see the problem with putting the front of one server near the back of another — the second server will draw in the already-heated air. This process fails to provide the needed cooling and continues to warm the air in the room as a whole.

To solve this, engineers and technicians arrange rows of servers in hot and cold aisles. Two rows face each other across an aisle intended only for the cool air that the servers draw in. The backs of the machines, in turn, face the backs of the next row across another aisle, so both rows expel hot air into the same area. In this way, hardware draws in only cool air from the cold aisle, and the warm air can be cooled adequately before being recirculated to the servers.
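
The alternating arrangement follows a simple rule: rows alternate direction, so the gap in front of a row is a cold aisle and the gap behind it is a hot aisle. The Python sketch below is a hypothetical planning helper, not a standard tool, that generates this pattern for a given number of parallel rows numbered from south to north.

```python
def aisle_layout(num_rows: int) -> list[str]:
    """Describe a hot/cold aisle arrangement for num_rows parallel rows of racks.

    Rows are numbered from south to north. Even-indexed rows face north and
    odd-indexed rows face south, so intakes face intakes and exhausts face exhausts.
    """
    layout: list[str] = []
    for i in range(num_rows):
        facing = "north" if i % 2 == 0 else "south"
        layout.append(f"row {i + 1}: fronts face {facing}")
        if i + 1 < num_rows:
            # A north-facing row and the south-facing row above it have their fronts
            # (intakes) toward each other: a cold aisle. The next gap joins two backs.
            layout.append("COLD aisle" if i % 2 == 0 else "HOT aisle")
    return layout


if __name__ == "__main__":
    for line in aisle_layout(4):
        print(line)
```

For four rows, the aisles between them come out cold, hot, cold.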

Some large data centers can use this excess heat for their own purposes. Rather than considering the hot air a mere byproduct that possibly requires further power to cool, some centers can direct this air through ductwork, heating other parts of the building in colder climates. This provides a natural cooling method and saves energy that would typically be required for general heating purposes.

If the heat is not sent throughout the building, it must be treated in some way before reentering the system. Some systems use fans or condensers to cool the air before recirculation. Others have used geothermal principles, noting that the temperature in the ground stays relatively consistent regardless of the weather above. By sending the air through in-ground ductwork, the air is cooled by the surrounding earth, only requiring energy for the blower motor or fan that draws the warm air through the system.

How Does Cable Management Affect My Data Center?

When planning a data center, cable management is often overlooked by data center owners but is an essential consideration. Connecting two points can seem straightforward at first, but there are several concerns to consider.

Even with a comprehensive initial plan, some companies do not take into account where additional hardware might go if they ever need to expand. In these situations, the cables quickly become a web that traps air and keeps it from following its intended circulation and cooling patterns. These air dams can cause significant damage by quickly raising temperatures.

While probably the greatest risk, air damming is not the only problem that unplanned cabling can cause. Disorganized wiring makes tracking down problems difficult, costing time and resources when you need to be at your most efficient. Moreover, mistreating cables can make them more susceptible to breaking, shortening the life of your connections.

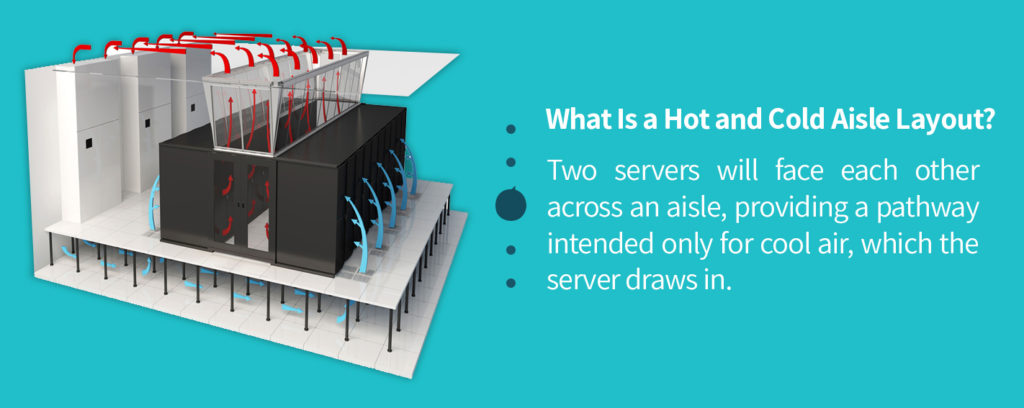

To improve your cabling:

- Bundle your cables: With cable ties or appropriate channeling, cables will follow the same path and keep airways open. If possible, use a structured system in which the wires are all routed together. Unstructured connections, or point-to-point connections, quickly create a tangle of cable that is sure to restrict airflow. From the very beginning stages, your plans should account for how and where you will group your cables.

- Avoid the cold aisle: If your plan uses hot and cold aisles, avoid anything that will clog up your cold air circulation. Hot air still needs a clear path back to the cooling units, but air trapped in the hot aisle was headed for cooling anyway, while cold air trapped by cables warms up before it ever reaches a server intake and never serves its purpose.

- Run cables with the air flow: Air dams occur when too many cables run perpendicular to the airflow, blocking air from passing. Logically, one way to prevent this is to run your cables in the direction of the airflow so that the majority of the surface area is not in a position to impede circulation. Using raised flooring can provide further protection for your cables, keeping them out of the way and preventing issues before they start.

- Color code your cables: While this may not directly contribute to cooling, using a color scheme for your cables can save you time and frustration when tracing a wire. For instance, knowing which cables are in-band or out-of-band, or which are used for patch data or particular types of uplinks, makes it much easier to follow a cable along its path. With fewer frustrations, your service personnel can spend more time on maintenance and preventing problems.

- Identify your cables: Going a step further, finding some way to label your cables can be extremely helpful, especially if the wire passes through difficult-to-see locations. Use cables with serial numbers printed on each end or light-up ends that you can easily identify. While color coding is still helpful, this step makes finding the other end of a connection that much easier.

- Use the correct length: While it can sometimes be convenient to grab a longer cable that you are positive will reach the connection ports, using an appropriately sized cable prevents coiled slack and keeps cables from spreading into unwanted areas. The more cable you have laid out, the more potential for messes and air blockages.

- Get rid of extra cables: In short, every cable should have a purpose. If you’ve disconnected hardware at one end of a cable, remove the cable to prevent disorganization and excess. When you add a new component, use only the connections you need at the moment, and return later if you need to connect elsewhere. Never leave an unconnected cable to clutter up space.
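
Several of these practices (color coding, labeling, using the correct length and removing unused cables) can be checked automatically if you keep a cable inventory. The Python sketch below assumes a hypothetical inventory format and color scheme; the field names, colors and the 1 m slack allowance are illustrative, not an industry standard.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical color scheme; adapt it to your own conventions.
COLOR_SCHEME = {
    "in-band": "blue",
    "out-of-band": "yellow",
    "uplink": "green",
    "patch": "gray",
}


@dataclass
class Cable:
    label: str               # unique ID printed on both ends
    purpose: str             # key into COLOR_SCHEME
    color: str
    length_m: float          # actual cable length
    end_a: Optional[str]     # port at one end, None if unplugged
    end_b: Optional[str]     # port at the other end, None if unplugged
    run_m: float             # length of the path the cable actually needs


def audit(cables: list[Cable], max_slack_m: float = 1.0) -> list[str]:
    """Flag cables that break the color, labeling, length or removal rules."""
    issues: list[str] = []
    seen: set[str] = set()
    for c in cables:
        if c.label in seen:
            issues.append(f"{c.label}: duplicate label")
        seen.add(c.label)
        expected = COLOR_SCHEME.get(c.purpose)
        if expected is None:
            issues.append(f"{c.label}: unknown purpose '{c.purpose}'")
        elif c.color != expected:
            issues.append(f"{c.label}: color {c.color}, expected {expected}")
        if c.end_a is None or c.end_b is None:
            issues.append(f"{c.label}: not connected at both ends, remove it")
        if c.length_m - c.run_m > max_slack_m:
            issues.append(f"{c.label}: {c.length_m - c.run_m:.1f} m of excess length")
    return issues


if __name__ == "__main__":
    inventory = [
        Cable("C001", "uplink", "green", 3.0, "sw1:1", "srv1:eth0", 2.5),
        Cable("C002", "patch", "blue", 10.0, "pp1:4", None, 2.0),
    ]
    for issue in audit(inventory):
        print(issue)
```

Here cable C001 passes, while C002 is flagged for the wrong color, a loose end and 8 m of excess length.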

How Do I Design a Data Center Power Supply?

Powering a data center requires precise equipment and monitoring. To power a data center, you need:

- Uninterruptible power supply: Energy comes into the center and is passed along to an uninterruptible power supply (UPS). The UPS regulates the energy through the use of a rectifier and also stores backup energy in a battery for emergency use.

- Power distribution unit: After the UPS, the energy flows to the power distribution unit (PDU), which converts the incoming power to a usable voltage. This stage also helps with power monitoring.

- Power panel: Next, the energy moves to the power panel, which uses breakers to divide the power into separate circuits.

- PDU whip: At the whip, power from the PDU is available through an outlet. At this point, users can plug in a cord to use the energy.

- Energy to the racks: The power cords transfer energy from the PDU whip to the racks, which contain the loads.
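
Once the power panel splits power into circuits, each circuit’s load needs to stay comfortably below its breaker rating. A common planning rule of thumb in North America is to keep continuous loads at or below 80 percent of the breaker rating; treat that figure, the 208 V circuits and the rack draws below as assumptions, and confirm requirements against your local electrical code with a qualified electrician. The Python sketch sums the loads on each circuit and flags any circuit over that limit.

```python
from dataclasses import dataclass


@dataclass
class Circuit:
    name: str
    volts: float            # single-phase circuit voltage
    breaker_amps: float     # breaker rating from the power panel
    loads_w: list[float]    # power drawn by each cord plugged into this circuit, in watts


def circuit_report(circuits: list[Circuit], derate: float = 0.8) -> dict[str, str]:
    """Compare each circuit's current with derate * breaker rating.

    Assumes a power factor of 1, so current is simply I = P / V.
    """
    report: dict[str, str] = {}
    for c in circuits:
        amps = sum(c.loads_w) / c.volts
        limit = c.breaker_amps * derate
        status = "OK" if amps <= limit else "OVERLOADED"
        report[c.name] = f"{status}: {amps:.1f} A of {limit:.1f} A allowed"
    return report


if __name__ == "__main__":
    # Hypothetical panel with two 208 V circuits feeding rack power cords.
    panel = [
        Circuit("A1", 208.0, 30.0, [2_000.0, 2_000.0]),
        Circuit("A2", 208.0, 20.0, [3_000.0, 1_000.0]),
    ]
    for name, line in circuit_report(panel).items():
        print(name, line)
```

With these numbers, both circuits draw about 19.2 A, which is fine on the 30 A breaker (24 A allowed) but overloads the 20 A breaker (16 A allowed).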

With the amount of cooling and hardware operation in a data center, it should be no surprise that they demand substantial power.

In recent years, data centers worldwide have used more energy than the United Kingdom.

Data center planners face the energy efficiency paradox — some customers avoid investing in energy-saving equipment upfront because of costs, but by trying to save money, ultimately end up paying more. Aside from the noticeable difference in energy and cooling cost, inefficient equipment can also cause systems to fail more quickly, increasing future costs.

How Does Maximizing Return Temperature Affect Efficiency?

One of the most critical aspects of data center temperature monitoring is maximizing the return temperature, the temperature of the air flowing back to the cooling units. Monitoring air density and temperature can make a significant difference in efficiency. A high return temperature means warm exhaust is not mixing with and heating the cold supply air, so air moves through the system more efficiently, possibly cutting energy consumption by up to 20 percent or even reducing the number of cooling units required.
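
The link between return temperature and efficiency follows from the sensible-heat relation for air. In US units, a common approximation is CFM = BTU/hr ÷ (1.08 × ΔT), where ΔT is the difference in °F between return air and supply air, and the 1.08 factor assumes air at standard density. For a fixed heat load, a larger ΔT (a higher return temperature) means less air has to be moved. The Python sketch below applies that approximation to a hypothetical 100 kW load.

```python
WATTS_TO_BTU_PER_HR = 3.412
SENSIBLE_HEAT_FACTOR = 1.08   # BTU/hr per CFM per deg F, standard-density air (approximation)


def required_cfm(heat_load_w: float, supply_f: float, return_f: float) -> float:
    """Airflow (cubic feet per minute) needed to carry heat_load_w at the given temperatures."""
    delta_t = return_f - supply_f
    if delta_t <= 0:
        raise ValueError("return air must be warmer than supply air")
    return heat_load_w * WATTS_TO_BTU_PER_HR / (SENSIBLE_HEAT_FACTOR * delta_t)


if __name__ == "__main__":
    # Hypothetical 100 kW load with 65 F supply air.
    for return_f in (75.0, 85.0, 95.0):
        cfm = required_cfm(100_000, 65.0, return_f)
        print(f"return {return_f:.0f} F (delta T {return_f - 65.0:.0f} F): {cfm:,.0f} CFM")
```

Raising ΔT from 10 °F to 20 °F halves the airflow required, from about 31,600 CFM to about 15,800 CFM, which is why letting hot and cold air mix (and so lowering the return temperature) wastes fan energy.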

What Does It Mean to Match Cooling Capacity?

Matching cooling capacity combines an awareness of specific needs with the ability to meet those needs more efficiently. The concept is to evaluate the heat in the system, target cooling as directly as possible at the problem areas and use the most efficient cooling strategies for that section. The more localized and focused the cooling, the less air is needed in the system in general, cutting possible waste. Though this type of analysis costs more money up front, it’s easy to see how the increased efficiency will quickly add up.
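
Matching capacity starts with comparing, zone by zone, how much heat is produced with how much cooling is delivered there. The minimal Python sketch below makes that comparison; the zone names, kilowatt figures and the 10 and 50 percent margins are hypothetical values chosen for illustration.

```python
def match_capacity(zones: dict[str, tuple[float, float]],
                   min_margin: float = 0.10, max_margin: float = 0.50) -> dict[str, str]:
    """Classify each zone from (heat_load_kw, cooling_capacity_kw).

    min_margin and max_margin are hypothetical planning bounds: capacity should
    exceed the load by at least min_margin but not by more than max_margin.
    """
    result: dict[str, str] = {}
    for zone, (load_kw, capacity_kw) in zones.items():
        if capacity_kw < load_kw * (1 + min_margin):
            result[zone] = "add cooling"          # risk of hot spots
        elif capacity_kw > load_kw * (1 + max_margin):
            result[zone] = "overprovisioned"      # energy spent cooling where it is not needed
        else:
            result[zone] = "matched"
    return result


if __name__ == "__main__":
    # Hypothetical zones: (heat load kW, cooling capacity kW).
    zones = {"row A": (40.0, 46.0), "row B": (40.0, 40.0), "storage": (10.0, 30.0)}
    print(match_capacity(zones))
```

In this example, row A is matched, row B needs more cooling and the storage zone is overprovisioned, suggesting capacity could be redirected from storage toward row B.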

What Approaches Should I Avoid in My Planning?

When planning a data center, what not to do can be as important as what to do. Often, these decisions come down to trying to cut corners or save money. However, setting up a proper plan is fundamental to securing the longevity and effectiveness of your data center.

Some of the most damaging or inefficient ways to plan your center are:

- Cooling the whole room: As addressed in discussing hot and cold aisles, whole-room cooling is inefficient and unproductive. Even if you are a small company with a few racks, appropriate cooling techniques will help minimize cooling costs while also prolonging the life of your equipment. Cooling an entire room wastes energy on parts of the room that do not need it, and it also contributes to uneven temperature regulation.

- Keeping inconsistent temperatures: Data systems will function at their best when held at consistent temperatures. This allows for more control over the environment and prevents moisture and condensation from building up on the electronics, as sometimes happens with rapid temperature changes. For the best results, use technology that monitors the needs of the equipment and supplies cooling as necessary, which will provide optimal consistency.

- Overcooling: While a consistently cold temperature likely will not damage your machines, running your cooling systems too cold uses extra energy for no added benefit. Check the manufacturer’s recommended temperature ranges for your equipment and stay within those limits. Otherwise, you’re paying for energy that you don’t need.

- Skimping on efficiency upfront: While getting started for less money may seem prudent, you’re likely to wear out hardware more quickly and pay to maintain or replace those systems. Moreover, you’ll end up losing those savings to energy inefficiency. Calculate your realistic energy consumption costs, then compare the annual operating costs of efficient hardware with those of its lower-cost counterparts. Chances are you’ll recover your investment quickly, and you’ll lengthen the life of your servers.

- Using all of your space at once: If you have substantial space, you may feel the urge to spread your current equipment across all of it. However, this can be short-sighted, as you will need to install any additional technology around it. Moreover, spreading your equipment to fill the space makes it harder to set up a HACS or CACS, requires longer cables and generally complicates the workspace.

- Implementing patchwork assembly: Be sure to go into the data center with a plan. Lining up rows of racks can look easy, but poor planning quickly leads to crossing cables that form air dams, inefficient use of space, poor air circulation and a host of other issues.
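
The advice to calculate realistic energy costs and compare efficient hardware with cheaper alternatives can start as a simple payback estimate: the extra purchase price divided by the yearly energy savings. The Python sketch below assumes continuous operation (8,760 hours a year) and uses hypothetical prices and power draws.

```python
HOURS_PER_YEAR = 8_760  # assumes the equipment runs around the clock


def simple_payback_years(extra_cost: float, baseline_kw: float, efficient_kw: float,
                         price_per_kwh: float) -> float:
    """Years until the energy savings of efficient equipment repay its extra purchase price."""
    annual_savings = (baseline_kw - efficient_kw) * HOURS_PER_YEAR * price_per_kwh
    if annual_savings <= 0:
        return float("inf")  # the "efficient" option saves nothing, so it never pays back
    return extra_cost / annual_savings


if __name__ == "__main__":
    # Hypothetical figures: $6,000 more up front, 1.5 kW lower average draw, $0.12 per kWh.
    years = simple_payback_years(6_000, baseline_kw=10.0, efficient_kw=8.5, price_per_kwh=0.12)
    print(f"Payback in about {years:.1f} years")
```

With those figures, the savings come to about $1,577 a year, so the extra cost is recovered in roughly 3.8 years. Because the sketch ignores the cooling energy saved alongside the IT power, maintenance costs and the time value of money, treat it as a rough first pass.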

How Can I Contact DataSpan?

Data centers are an invaluable part of our current society, providing interconnected networks that contribute to our lives in countless ways. Few people realize the complex nature of the systems that bring them the results of an Internet search or streaming video, but there is a highly technical operation supporting the storage and use of that information.

Without proper planning and technology, a row of servers can quickly destroy a budget through ineffective cooling or maintenance. To ensure that your equipment lasts and performs at a top level, follow data center best practices.

If you’re looking to plan your data center and would like more information about best practices, contact DataSpan to find out more.